Farewell EC2-Classic, it’s been swell

Retiring services isn’t something we do often at AWS. It’s quite rare. Companies rely on our offerings (their businesses literally live on these services), and that is something we take seriously. For example, SimpleDB is still around, even though DynamoDB is the “NoSQL” database of choice for our customers.

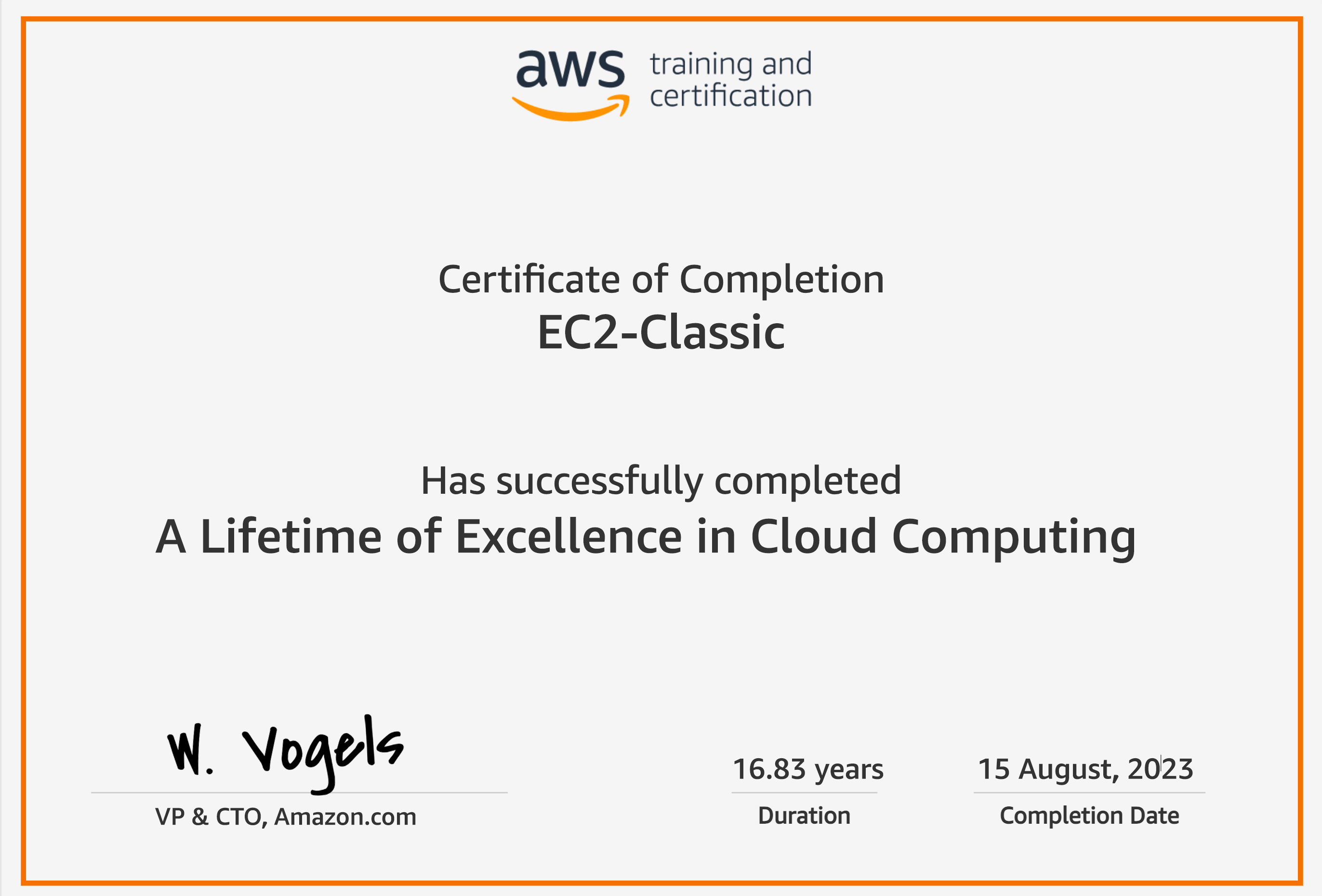

So, two years ago, when Jeff Barr announced that we’d be shutting down EC2-Classic, I’m sure there were at least a few of you who didn’t believe we’d actually flip the switch, and assumed we’d let it run forever. Well, that day has come. On August 15, 2023, we shut down the last instance of Classic. And with all of the history here, I think it’s worth celebrating the original version of one of the services that started what we now know as cloud computing.

EC2 has been around for quite a while, almost 17 years. Only SQS and S3 are older. So, I wouldn’t blame you if you were wondering what makes an EC2 instance “Classic”. Put simply, it’s the network architecture. When we launched EC2 in 2006, it was one giant 10.0.0.0/8 network. All instances ran on a single, flat network shared with other customers. It exposed a handful of features, like security groups and public IP addresses, which were assigned when an instance was spun up. Classic made acquiring compute dead simple, even though the stack running behind the scenes was incredibly complex. “Invent and Simplify” is one of the Amazon Leadership Principles, after all…

If you had launched an instance in 2006, an m1.small, you would have gotten a virtual CPU equivalent to a 1.7 GHz Xeon processor, 1.75 GB of RAM, 160 GB of local disk, and 250 Mb/s of network bandwidth. And it would have cost just $0.10 per clocked hour. It’s quite incredible how far cloud computing has come since then: a p3dn.24xlarge provides 100 Gbps of network throughput, 96 vCPUs, 8 NVIDIA V100 Tensor Core GPUs with 32 GiB of memory each, 768 GiB of total system memory, and 1.8 TB of local SSD storage, not to mention an Elastic Fabric Adapter (EFA) to accelerate ML workloads.

But 2006 was a different time, and that flat network and small collection of instance types, like the m1.small, was “Classic”. At the time it was truly revolutionary. Hardware had become a programmable resource that you could scale up or down at a moment’s notice. Every developer, entrepreneur, startup, and enterprise now had access to as much compute as they wanted, whenever they wanted it. The complexities of managing infrastructure (buying new hardware, upgrading software, replacing failed disks) had been abstracted away. And it changed the way we all designed and built applications.

Of course, the first thing I did when EC2 launched was move this blog to an m1.small. It was running Movable Type, and this instance was good enough to run both the server and the local (no RDS yet) database. Eventually I turned it into a highly available service with RDS failover and so on, and it ran there for 5+ years until the Amazon S3 Website feature was released in 2011. The blog has now been “serverless” for the past 12 years.

As we do with all of our services, we listened to what our customers needed next. This led us to add features like Elastic IP addresses, Auto Scaling, Load Balancing, CloudWatch, and new instance types better suited to different workloads. By 2013, we had enabled VPC, which allowed each AWS customer to manage their own secure, isolated slice of the cloud, defined for their business. It became the new standard, giving customers a new level of control that let them build even more comprehensive systems in the cloud.

We continued to support Classic for the next decade because our customers were using it, even as EC2 evolved and we implemented an entirely new virtualization platform, Nitro.

Ten years ago, during my 2013 keynote at re:Invent, I told you that we wanted to “support today’s workloads as well as tomorrow’s,” and our commitment to Classic is the best evidence of that. The amount of work that goes into an effort like this is not lost on me, but it is exactly this type of work that builds trust, and I’m proud of the way it has been handled. To me, this embodies what it means to be customer obsessed. The EC2 team kept Classic running (and running well) until every instance was shut down or migrated, providing documentation, tools, and support from engineering and account management teams throughout the process.

It’s bittersweet to say goodbye to one of our original offerings. But we’ve come a long way since 2006 and we’re not done innovating for our customers. It’s a reminder that building evolvable systems is a strategy, and revisiting your architectures with an open mind is a must. So, farewell Classic, it’s been swell. Long live EC2.

Now, go build!
