Bringing Digital People to life with autonomous animation

Working with computers is nothing new; we have been doing it for more than 150 years. In all of that time, one thing has remained constant: all of our interfaces have been driven by the capabilities (and limitations) of the machine. Sure, we have come a long way from looms and punch cards, but monitors, keyboards, and touchscreens are far from natural. We use them not because they are easy or intuitive, but because we are forced to.

When Alexa launched, it was a big step forward. It proved that voice was a viable and more equitable way for people to converse with computers. In the past few months, we have seen an explosion of interest in large language models (LLMs) for their ability to synthesize and present information in a way that feels convincing, even human-like. As we find ourselves spending more time talking with machines than we do face-to-face, the popularity of these technologies shows that there is an appetite for interfaces that feel more like a conversation with another person. But what’s still missing is the connection established through visual and non-verbal cues. The folks at Soul Machines believe that their Digital People can fill this void.

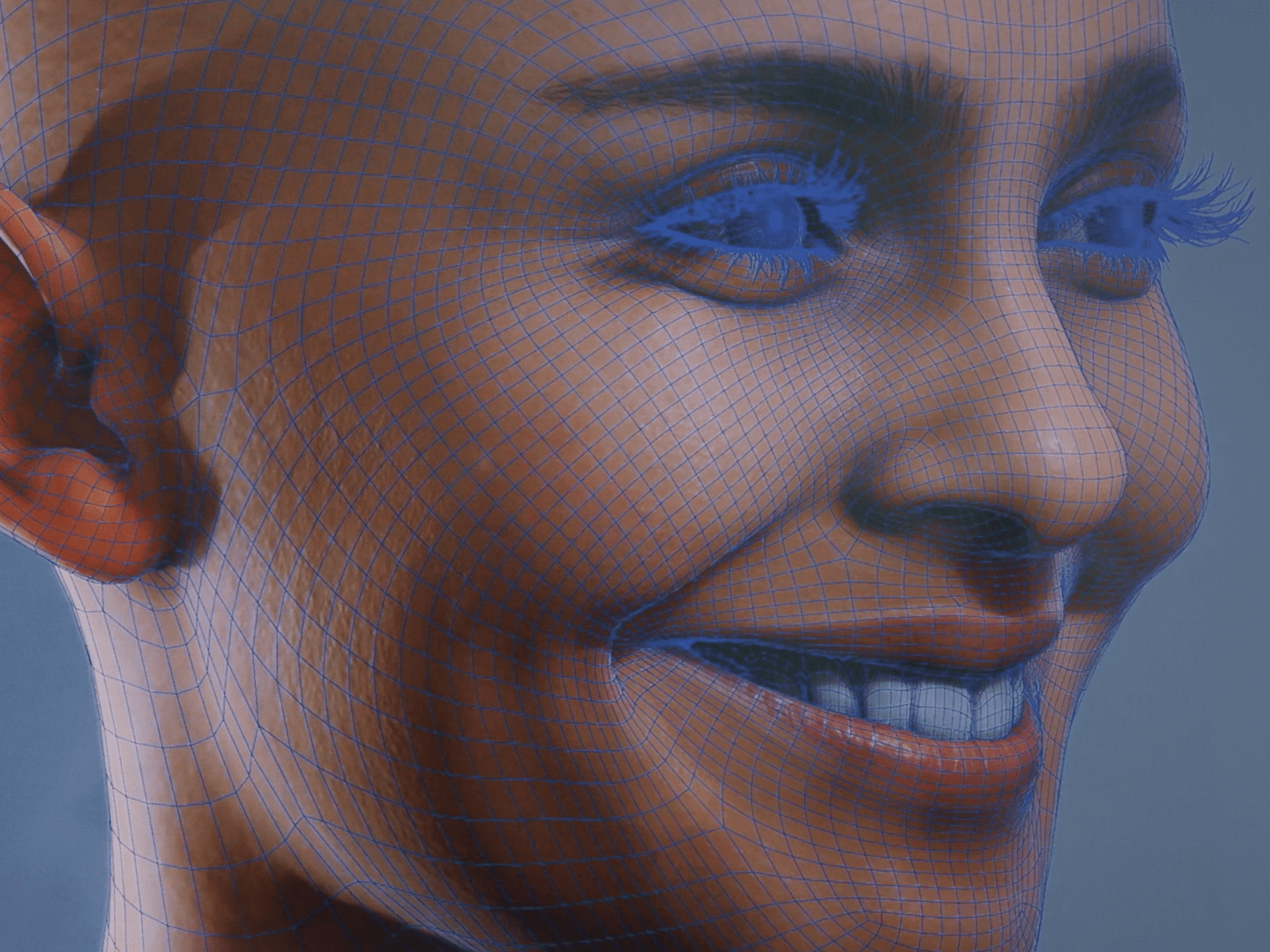

It all starts with CGI. For decades, Hollywood has used this technology to bring digital characters to life. When done well, humans and their CGI counterparts seamlessly share the screen, interacting with each other and reacting in ways that truly feel natural. Soul Machines’ co-founders have a lot of experience in this area; in the past, they did award-winning facial animation work on films such as King Kong and Avatar. However, creating and animating realistic digital characters is incredibly expensive, labor-intensive, and ultimately, not interactive. It doesn’t scale.

Soul Machines’ solution is autonomous animation.

At a high level, there are two parts that make this possible: the Digital DNA Studio, which allows end users to create highly realistic synthetic people; and an operating system called Human OS, which houses their patented Digital Brain, giving Digital People the ability to sense and perceive what is going on in their environment, and to react and animate accordingly in real time.

Embodiment is the goal: making the interface feel more human. It helps build a connection with end users, and it is what Soul Machines believes differentiates Digital People from chatbots. But, as their VP of Special Products, Holly Peck, puts it: “It only works, and it only looks right, when you can animate those individual digital muscles.”

To achieve this, you need extremely realistic 3D models. But how do you create a unique person that doesn’t exist in the real world? The answer is photogrammetry (which I spoke about a bit at re:Invent). Soul Machines starts by scanning a real person. Then they do the hard work of annotating every physiological muscle contraction in that person’s face before feeding the data to a machine learning model. Now repeat that hundreds of times and you wind up with a set of components that can be used to create unique Digital People. As I’m sure you can imagine, this produces a tremendous amount of data, roughly 2 to 3 TB per scan, but it is integral to the normalization process. It ensures that whenever a digital person is autonomously animated, regardless of the components used to create them, every expression and gesture feels genuine.
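Why normalization matters can be illustrated with a linear blendshape model, a standard facial-animation technique used here purely as an assumption; the post does not say how Human OS represents faces. In this sketch, a face is a neutral mesh, each muscle contraction is a per-vertex offset, and an expression is the neutral mesh plus each offset scaled by a contraction level between 0 and 1. The offsets only apply to faces whose vertices share the same count and order, which is the kind of consistency a normalization step would need to provide. The function `animate_face` and the muscle data below are hypothetical.

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]


def animate_face(
    neutral: List[Vertex],
    muscle_deltas: Dict[str, List[Vertex]],
    activations: Dict[str, float],
) -> List[Vertex]:
    """Hypothetical linear blendshape: neutral + sum(activation * delta).

    Assumes every delta has the same vertex count and ordering as the
    neutral mesh, i.e. that all components were normalized to one topology.
    """
    for name, delta in muscle_deltas.items():
        if len(delta) != len(neutral):
            raise ValueError(f"delta {name!r} does not match the neutral mesh topology")

    result = [list(v) for v in neutral]  # copy so the neutral mesh is untouched
    for name, weight in activations.items():
        if name not in muscle_deltas:
            raise KeyError(f"unknown muscle {name!r}")
        # Clamp each contraction to [0, 1]: fully relaxed to fully contracted.
        w = min(max(weight, 0.0), 1.0)
        for i, (dx, dy, dz) in enumerate(muscle_deltas[name]):
            result[i][0] += w * dx
            result[i][1] += w * dy
            result[i][2] += w * dz
    return [tuple(v) for v in result]


neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
deltas = {
    # Hypothetical offset: the second vertex (a lip corner) rises as this muscle contracts.
    "zygomatic_major_left": [(0.0, 0.0, 0.0), (0.0, 0.2, 0.0)],
}
print(animate_face(neutral, deltas, {"zygomatic_major_left": 0.5}))
# prints [(0.0, 0.0, 0.0), (1.0, 0.1, 0.0)]
```

Because the offsets are defined against a shared topology, the same `activations` dictionary would produce the same expression on any face assembled from compatible components. Production rigs often layer nonlinear corrections on top of a purely linear blend like this one.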

The Digital Brain is what brings this all to life. In some ways, it works similarly to Alexa. A voice interaction is streamed to the cloud and converted to text. Using natural language processing (NLP), the text is processed into an intent and routed to the appropriate subroutine. Then, Alexa streams a response back to the user. However, with Digital People, there’s an additional input and output: video. Video input is what allows each digital person to observe subtle nuances that aren’t detectable in speech alone, and video output is what enables them to react in emotive ways, in real time, such as with a smile. It’s more than putting a face on a chatbot; it’s autonomously animating each muscle contraction in a digital person’s face to help facilitate what they call “a return on empathy.”
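The interaction described above can be sketched as one conversational turn with two inputs (a transcript and a visual cue) and two outputs (a reply and a facial expression). This is an illustrative toy, not how Alexa or Soul Machines’ Digital Brain is implemented: speech-to-text and video analysis are stubbed as fields on a hypothetical `Turn`, intent recognition is a keyword matcher, streaming is omitted, and every name here (`classify_intent`, `HANDLERS`, `choose_expression`, `handle_turn`) is invented for illustration.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Turn:
    """One user turn; both fields are hypothetical stand-ins for real perception."""
    transcript: str      # would come from cloud speech-to-text
    user_smiling: bool   # would come from analyzing the video input


@dataclass
class Response:
    speech: str          # would be synthesized and streamed back as audio
    expression: str      # would drive the animated face on the video output


def classify_intent(text: str) -> str:
    """Toy keyword matcher standing in for an NLP intent model."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    if "appointment" in words:
        return "check_in"
    if words & {"hello", "hi", "hey"}:
        return "greeting"
    return "fallback"


# Route each intent to the "subroutine" that produces a reply.
HANDLERS: Dict[str, Callable[[str], str]] = {
    "greeting": lambda text: "Hello! How can I help you today?",
    "check_in": lambda text: "Let's get you checked in for your appointment.",
    "fallback": lambda text: "Sorry, could you say that another way?",
}


def choose_expression(intent: str, user_smiling: bool) -> str:
    """Pick a facial reaction using the visual cue, which speech alone lacks."""
    if user_smiling:
        return "smile"  # mirror the user's smile
    return "concerned" if intent == "fallback" else "attentive"


def handle_turn(turn: Turn) -> Response:
    intent = classify_intent(turn.transcript)
    speech = HANDLERS[intent](turn.transcript)
    return Response(speech=speech, expression=choose_expression(intent, turn.user_smiling))


print(handle_turn(Turn(transcript="Hello there!", user_smiling=True)))
# prints Response(speech='Hello! How can I help you today?', expression='smile')
```

Swapping the keyword matcher for a trained model, or the boolean for a real perception signal, changes the stages but not the shape of the loop: perceive, interpret, route, then respond with both words and a facial reaction.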

From processing to rendering to streaming video — it all happens in the cloud.

We are progressing towards a future where virtual assistants can do more than just answer questions: a future where they can proactively help us. Imagine using a digital person to augment check-ins for medical appointments. With awareness of previous visits, there would be no need for repetitive or redundant questions, and with visual capabilities, these assistants could monitor a patient for symptoms or indicators of physical and cognitive decline. This means that medical professionals could spend more time on care and less time collecting data. Education is another excellent use case, for example, learning a new language. A digital person could augment a lesson in ways that a teacher or recorded video can’t. It opens up the possibility of judgment-free, one-on-one education, where a digital person interacts with a student with infinite patience, evaluating and providing guidance on everything from vocabulary to pronunciation in real time.

By combining biology with digital technologies, Soul Machines is asking the question: what if we went back to a more natural interface? In my eyes, this has the potential to unlock digital systems for everyone in the world. The opportunities are vast.

Now, go build!