Dynamic Content Support in Amazon CloudFront

In the past three and a half years, Amazon CloudFront has changed the content delivery landscape. It has demonstrated that a CDN does not have to be complex to use, nor come with expensive contracts, minimum commits, or upfront fees that lock you into a single vendor for a long time. CloudFront is simple, fast, and reliable, with the usual pay-as-you-go model. With just one click you can enable content to be distributed to customers with low latency and high reliability.

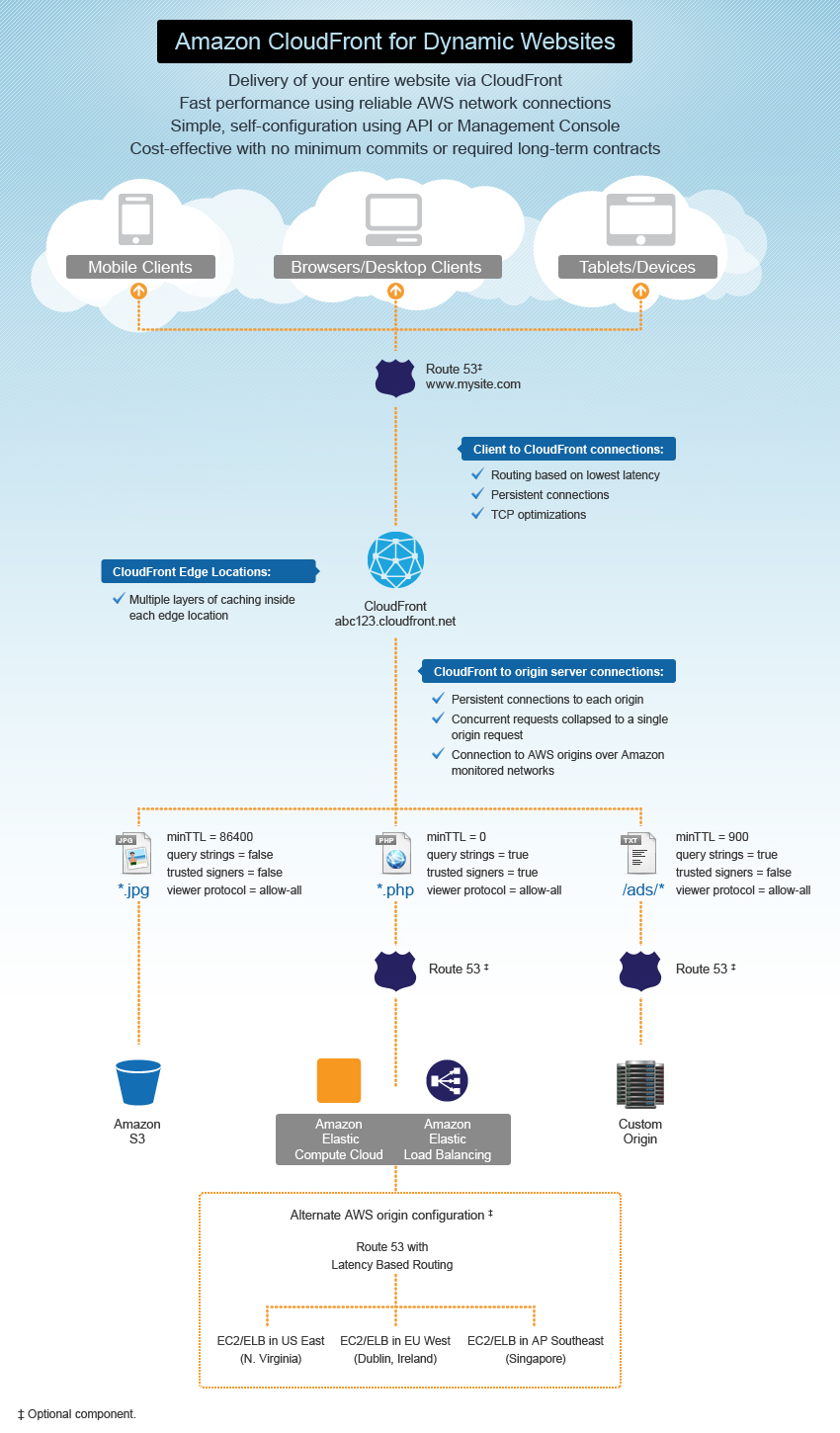

Today Amazon CloudFront has taken another major step forward in ease of use. It now supports delivery of entire websites containing both static objects and dynamic content. With these features, CloudFront makes it as simple as possible for customers to speed up delivery of their entire dynamic website running in Amazon EC2/ELB (or third-party origins), without needing to worry about which URLs should point to CloudFront and which should go directly to the origin.

Dynamic Content Support

Recall that last month the CloudFront team announced that it was lowering the minTTL customers can set on their objects to as low as 0 seconds, to support delivery of dynamic content. Beyond TTLs, customers need some other features to deliver dynamic websites through CloudFront. The first set of features that CloudFront is launching today includes:

Multiple Origin Servers: the ability to specify multiple origin servers, including a default origin, for a CloudFront download distribution. This is useful when customers want to use different origin servers for different types of content. For example, an Amazon S3 bucket can be used as the origin for static objects and an Amazon EC2 instance as the origin for dynamic content, all fronted by the same CloudFront distribution domain name. Of course non-AWS origins are also permitted.

Query String based Caching: the ability to include query string parameters as part of the object’s cache key. Customers will have a switch to turn query strings ‘on’ or ‘off’. When turned off, CloudFront’s behavior will be the same as today - i.e., CloudFront will not pass the query string to the origin server nor include query string parameters as a part of the object’s cache key. And when query strings are turned on, CloudFront will pass the full URL (including the query string) to the origin server and also use the full URL to uniquely identify an object in the cache.
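The on/off switch described above can be sketched in a few lines of Python. This is only an illustration of the described behavior, not CloudFront's implementation; the function name `cache_key` and the example URL are hypothetical.

```python
from urllib.parse import urlsplit


def cache_key(url: str, forward_query_strings: bool) -> str:
    """Return the path (plus query string, if enabled) that identifies an object.

    Hypothetical helper: per the description above, the same value is both
    what is sent to the origin and what identifies the object in the cache.
    """
    parts = urlsplit(url)
    if forward_query_strings and parts.query:
        # Query strings "on": the full URL distinguishes cached objects.
        return f"{parts.path}?{parts.query}"
    # Query strings "off": the query string is dropped entirely.
    return parts.path


if __name__ == "__main__":
    url = "http://d111111abcdef8.example.net/search?q=cdn&page=2"
    print(cache_key(url, forward_query_strings=False))
    print(cache_key(url, forward_query_strings=True))
```

With the switch off, `/search?q=cdn` and `/search?q=dns` collapse to one cached object; with it on, each is cached separately.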

URL based configuration: the ability to configure cache behaviors based on URL path patterns. Each URL path pattern will include a set of cache behaviors associated with it. These cache behaviors include the target origin, a switch for query strings to be on/off, a list of trusted signers for private content, the viewer protocol policy, and the minTTL that CloudFront should apply for that URL path pattern. See the graphic at the end of this post for an example configuration.
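To make the idea of path-pattern cache behaviors concrete, here is a minimal Python sketch. The origin names, patterns, and TTL values are hypothetical, and the first-match-wins ordering and the use of shell-style wildcards via `fnmatch` are assumptions made for illustration rather than a statement of how CloudFront evaluates patterns. Trusted signers and viewer protocol policy are omitted for brevity.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class CacheBehavior:
    path_pattern: str            # URL path pattern, e.g. "/images/*"
    target_origin: str           # which origin serves matching requests
    forward_query_strings: bool  # query string switch for this pattern
    min_ttl_seconds: int         # minTTL applied to matching objects


# Hypothetical configuration: S3 for static objects, EC2 for dynamic pages,
# and a catch-all default behavior at the end.
BEHAVIORS = [
    CacheBehavior("/images/*", "static-s3-bucket", False, 86400),
    CacheBehavior("*.php", "dynamic-ec2-app", True, 0),
    CacheBehavior("*", "dynamic-ec2-app", False, 3600),
]


def select_behavior(path: str, behaviors=BEHAVIORS) -> CacheBehavior:
    """Return the first behavior whose pattern matches the request path."""
    for behavior in behaviors:
        if fnmatchcase(path, behavior.path_pattern):
            return behavior
    raise LookupError(f"no cache behavior matches {path!r}")


if __name__ == "__main__":
    for path in ("/images/logo.png", "/cart/view.php", "/about.html"):
        b = select_behavior(path)
        print(path, "->", b.target_origin, "minTTL", b.min_ttl_seconds)
```

All three requests arrive at the same distribution domain name; only the matched behavior decides which origin is contacted and how long the response may be cached.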

More new features

In addition to these features, the CloudFront team has made other improvements to speed up delivery of content, and all customers get these benefits by default without additional configuration. These performance optimizations are available for all types of content (static and dynamic) delivered via CloudFront. Specifically:

Optimal TCP Windows. The TCP initcwnd has been increased for all CloudFront hosts to maximize the available bandwidth between the edge and the viewer. This is in addition to the existing optimizations of routing viewers to the edge location with the lowest latency for that user and maintaining persistent connections with clients.
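Why a larger initial congestion window helps can be seen with a rough back-of-the-envelope model. The Python sketch below assumes idealized TCP slow start (the window doubles every round trip, no packet loss) and a 1460-byte segment size; the initcwnd values of 3 and 10 segments are illustrative only and are not CloudFront's actual settings.

```python
import math


def round_trips_to_send(total_bytes: int, initcwnd_segments: int,
                        mss_bytes: int = 1460) -> int:
    """Count round trips needed to deliver a response under ideal slow start."""
    segments_left = math.ceil(total_bytes / mss_bytes)
    cwnd = initcwnd_segments
    trips = 0
    while segments_left > 0:
        segments_left -= cwnd  # send one full window this round trip
        cwnd *= 2              # slow start: window doubles each round trip
        trips += 1
    return trips


if __name__ == "__main__":
    size = 100 * 1024  # a 100 KB object
    for initcwnd in (3, 10):
        trips = round_trips_to_send(size, initcwnd)
        print(f"initcwnd={initcwnd}: {trips} round trips")
```

In this simplified model a 100 KB object takes 5 round trips with an initial window of 3 segments and 4 with an initial window of 10, so the saving is roughly one viewer round trip per fresh connection.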

Persistent Connection to Origins. CloudFront edge locations now maintain long-lived persistent connections to the origins. This reduces the connection set-up time from the edge to the origin for each new viewer. When the viewer is far away from the origin, this is even more helpful in minimizing total latency between the viewer and the origin.

Selecting the best AWS region for Origin Fetch. When customers run their origins in AWS, we expect that our network paths from each CloudFront edge to the various AWS Regions will perform better, with less packet loss, given that we monitor and optimize these network paths for availability and performance. In addition, the architecture diagram shows an optional configuration in which developers use Route 53’s LBR (Latency Based Routing) to run their origin servers in different AWS Regions. Each CloudFront edge location will then go to the “best” AWS Region for the origin fetch. Route 53 already knows which CloudFront host is in which edge location (this is an integration we’ve built between the two services), which helps improve performance even further.
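Conceptually, latency-based routing amounts to choosing the Region with the lowest measured latency from a given edge location. The Python sketch below illustrates only that selection step; the function name and the latency figures are made up for the example and do not reflect how Route 53 gathers or stores its measurements.

```python
from typing import Mapping


def best_region(latency_ms_by_region: Mapping[str, float]) -> str:
    """Pick the Region with the lowest latency as seen from one edge location."""
    if not latency_ms_by_region:
        raise ValueError("at least one Region is required")
    return min(latency_ms_by_region, key=latency_ms_by_region.get)


if __name__ == "__main__":
    # Hypothetical latencies as seen from an edge location in Europe.
    seen_from_edge = {
        "us-east-1": 80.0,
        "eu-west-1": 12.0,
        "ap-southeast-1": 190.0,
    }
    print(best_region(seen_from_edge))
```

An edge location in another part of the world would see a different latency table and so could be sent to a different Region for the same origin hostname.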

Amazon CloudFront is expanding its functionality and feature set at an incredible pace. I am particularly excited about these features that help customers deliver both static and dynamic content through one distribution. CloudFront stays true to its mission of making a Content Delivery Network dead simple to use, and now it does this for dynamic content as well.

For more details, see the CloudFront detail page and the posting on the AWS developer blog.